Slurm Partitions (The general analysis division)

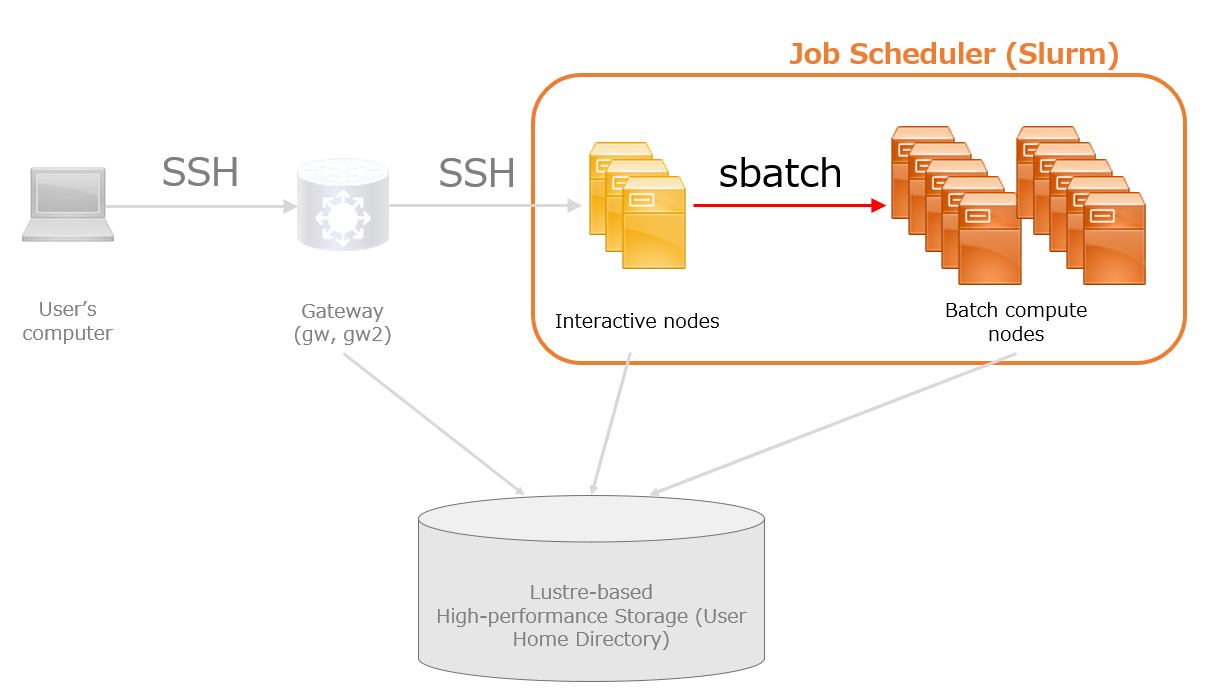

The compute nodes managed by the Slurm job scheduler are broadly categorised into interactive nodes and batch compute nodes.

- Interactive nodes are computing environments designed for users to carry out program development or to perform small-scale, short-duration tasks in an interactive, real-time manner.

- Batch compute nodes are intended for long-running jobs and computational tasks that require large amounts of CPU or memory resources.

In Slurm, a job is a request for computation submitted to either the interactive or the batch compute nodes, and jobs are managed through partitions. If a job requests more resources than are currently available, it is placed in a queue within the appropriate partition. Slurm runs the job automatically once sufficient resources become available.
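The queueing behaviour described above can be observed with `squeue`. The following Python sketch lists the jobs that are still waiting in a given partition. It assumes that `squeue` is available on the `PATH`; the function names `squeue_command`, `parse_pending`, and `pending_jobs` are hypothetical helpers introduced here for illustration and are not part of Slurm.

```python
import subprocess


def squeue_command(partition):
    """Build an squeue call that prints 'jobid state reason' per job, without a header."""
    # -h: no header, -p: partition, -o: output format
    # %i = job ID, %T = job state (extended form), %r = reason the job is waiting
    return ["squeue", "-h", "-p", partition, "-o", "%i %T %r"]


def parse_pending(text):
    """Return (job_id, reason) for every line whose state is PENDING."""
    pending = []
    for line in text.splitlines():
        fields = line.split(maxsplit=2)
        if len(fields) == 3 and fields[1] == "PENDING":
            pending.append((fields[0], fields[2]))
    return pending


def pending_jobs(partition):
    """Run squeue (requires a Slurm installation) and list the waiting jobs."""
    result = subprocess.run(
        squeue_command(partition),
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_pending(result.stdout)
```

On a system with Slurm installed, `pending_jobs("epyc")` would return the IDs of queued jobs in the `epyc` partition together with the reason Slurm reports for the wait.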

In the general analysis division of the NIG Supercomputer, Slurm partitions are defined based on the type of compute node used in each system configuration.

| Node Type | Slurm Partition Name | Hardware Specification | Number of Nodes / Total CPU Cores |
|---|---|---|---|
| Interactive Node | login | HPC CPU Optimised Node Type 1 | 3 nodes / 576 cores |
| Batch Compute Nodes | epyc | HPC CPU Optimised Node Type 1 | 12 nodes / 2304 cores |
| Batch Compute Nodes | rome | HPC CPU Optimised Node Type 2 | 9 nodes / 1152 cores |
| Batch Compute Nodes | short.q | HPC CPU Optimised Node Type 1 | 1 node / 192 cores |
| Batch Compute Nodes | medium | Memory Optimisation Node Type 2 | 2 nodes / 384 cores |
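As a small illustration of how the partition table can guide a resource request, the Python sketch below transcribes the table into a dictionary, derives the number of cores per node, and builds an `sbatch` command line. The helper names and the script name `job.sh` are hypothetical; `-p` (partition) and `-c` (CPUs per task) are standard `sbatch` options. Any site-specific limits beyond the node size are not modelled here.

```python
# Partition table transcribed from above: name -> (nodes, total CPU cores).
PARTITIONS = {
    "login": (3, 576),
    "epyc": (12, 2304),
    "rome": (9, 1152),
    "short.q": (1, 192),
    "medium": (2, 384),
}


def cores_per_node(partition):
    """Total cores divided by node count for the given partition."""
    nodes, total_cores = PARTITIONS[partition]
    return total_cores // nodes


def sbatch_command(partition, cpus, script="job.sh"):
    """Build an sbatch argument list, rejecting requests larger than one node."""
    if partition not in PARTITIONS:
        raise ValueError(f"unknown partition: {partition}")
    limit = cores_per_node(partition)
    if cpus > limit:
        # A single task cannot span nodes, so -c must fit within one node.
        raise ValueError(f"{cpus} CPUs exceeds {limit} cores per node in {partition}")
    return ["sbatch", "-p", partition, "-c", str(cpus), script]
```

For example, `sbatch_command("epyc", 16)` yields the arguments for `sbatch -p epyc -c 16 job.sh`, while a request for 200 CPUs in `rome` is rejected because each `rome` node has 128 cores according to the table.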

For details on how to use Slurm, refer to 'How to use Slurm' in the Software section.